When Employees Train Models on Company Data: Ownership and Consent

Artificial intelligence is transforming workplaces, but it also raises complex ownership and consent questions. In the U.S., when employees use company data to train machine learning or large language models, who owns the resulting weights, datasets, and outputs? Can an employer claim full control, or do employees retain certain rights? This guide explores the legal principles, policy best practices, and real-world implications of AI model ownership in employment settings.

Why AI Ownership Matters More Than Ever

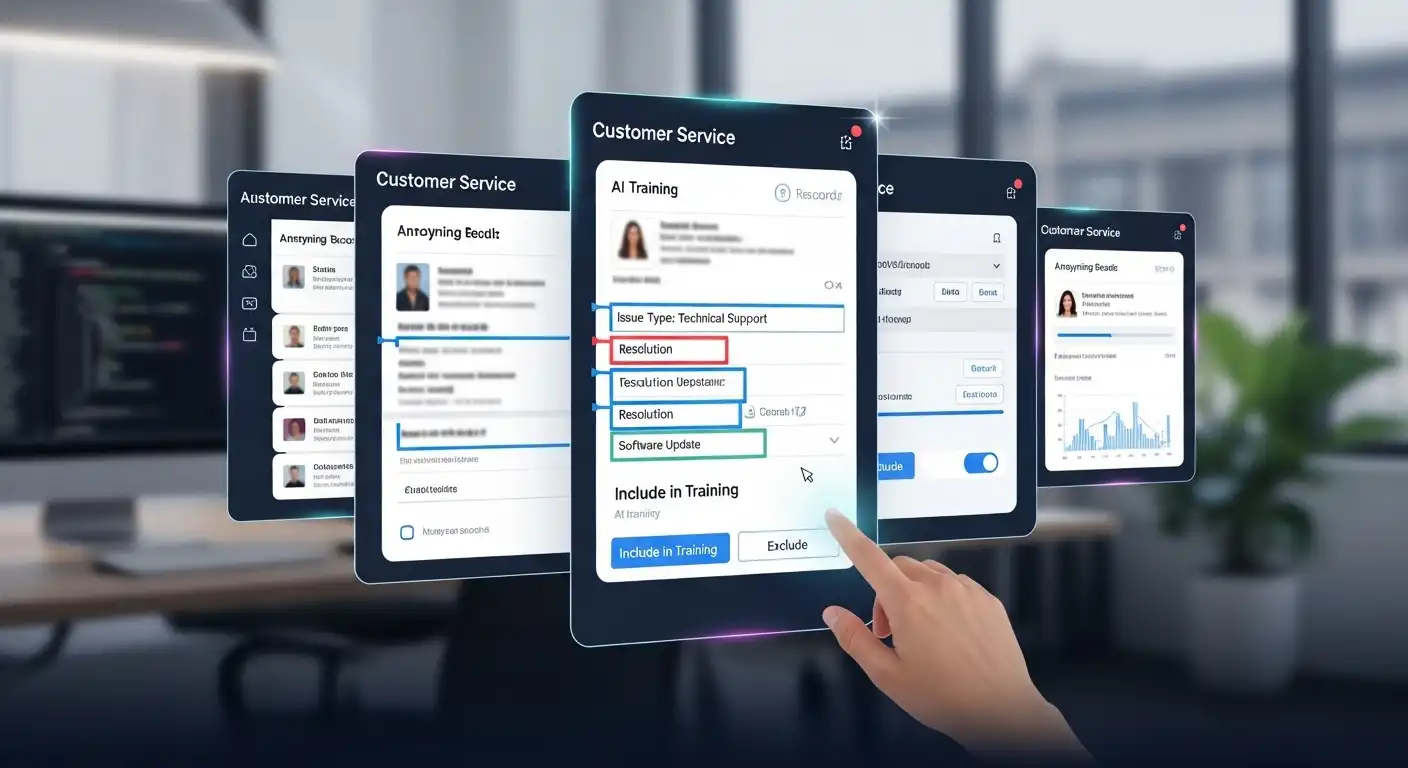

Businesses now embed AI in everything—from customer service bots to predictive analytics. But behind every model lies valuable intellectual property and sensitive data. When employees experiment with training or fine-tuning AI using internal data, they may inadvertently create assets that don’t fit cleanly into existing IP categories.

In 2025, organizations face a new class of risk: internal models that contain proprietary datasets, private information, or even copyrighted material. Determining who owns the model and who has permission to use the data isn’t just a technical matter—it’s a legal necessity.

Ownership defines who can monetize, license, or modify the model. Consent defines whose data can be used and under what conditions. Without clarity, disputes over AI assets can easily escalate into intellectual property or trade secret litigation.

Understanding the Building Blocks: Data, Model, and Weights

Before we tackle ownership, it’s vital to understand what employees actually create when training models:

- Training Data: The raw or curated information—text, images, code, or records—used to teach the model patterns.

- Model Architecture: The algorithmic framework (e.g., GPT, BERT, ResNet) that defines how data flows and transforms.

- Weights and Parameters: Numerical representations that encode knowledge gained during training.

- Outputs: The text, images, or insights the model generates after training.

Each component may be subject to different ownership and consent requirements. Training data could involve third-party licenses; model weights might qualify as trade secrets; outputs may be new creative works. This complexity is why ownership cannot be assumed—it must be explicitly defined.

U.S. Legal Framework: Who Owns the Model?

Work Made for Hire Doctrine

Under the U.S. Copyright Act, a work prepared by an employee within the scope of employment is a “work made for hire” (17 U.S.C. § 101). The employer is treated as its author and owns the copyright unless the parties agree otherwise in a signed writing (17 U.S.C. § 201(b)). That typically covers software, documentation, and training code.


However, AI complicates things. Model weights, the numerical matrices that emerge through training, may not fit traditional definitions of “authorship.” Because copyright protects only works of human authorship, courts have not yet settled whether trained weights qualify for copyright at all, which is one reason many companies rely on trade secret protection instead.

Employment and IP Assignment Agreements

Most U.S. companies rely on IP assignment clauses in employment contracts. These clauses ensure that any inventions, code, or models created within the scope of employment belong to the company.

Yet problems arise when employees train models using both company and personal resources. For example, an engineer might fine-tune an open-source model at home using proprietary data. Without clear agreements, disputes can arise over whether the resulting model is corporate property or a personal project.

Trade Secret Protection

Many organizations protect model weights and training datasets as trade secrets under the Defend Trade Secrets Act (DTSA). To qualify, information must derive independent economic value from not being generally known, and its owner must take reasonable measures to keep it secret, such as limiting access, using NDAs, and logging training activity.

If employees share model artifacts outside authorized channels or upload them to public repositories, that protection can be lost, often irreversibly.

Consent: Data Rights and Privacy Obligations

Ownership covers who controls the model; consent governs whether data was used legally in the first place. Companies must ensure that data used for training was collected, processed, and repurposed with valid authorization.

Employee Data

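If internal HR data, chat logs, or emails are used to train a model, the company must consider state privacy laws. The California Consumer Privacy Act (CCPA), as amended by the CPRA, now applies to employee personal information, and the Illinois Biometric Information Privacy Act (BIPA) applies if training data includes biometric identifiers such as voiceprints or face geometry. Depending on the state, employees may have a right to know how their information is used, even for internal AI development.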

Customer Data

Customer data typically falls under privacy notices and contracts. If the company plans to use customer communications or behavior data for AI training, disclosure is essential. Otherwise, the company risks breach-of-contract and privacy claims.

Third-Party Data

External datasets often come with license terms restricting AI training. Violating those terms can lead to costly infringement suits. Always confirm whether data sources are open for training and whether attribution or compensation is required.

The U.S. Copyright Office is also studying how copyright law applies to AI training, which underscores the legal gray areas organizations face.

Defining Company vs. Employee Rights

The Employer’s Perspective

Employers typically assume that anything produced on company time or with company resources belongs to them. That’s reasonable but not absolute. To secure ownership, companies should establish formal AI policies that specify:

- Authorized training platforms and datasets

- Permitted open-source tools and model licenses

- Rules for employee experimentation and side projects

- Procedures for logging, reviewing, and archiving training sessions
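A training-session log of the kind this policy item calls for can be as simple as one append-only JSON record per run. The sketch below is a minimal illustration, assuming a hypothetical record schema (`TrainingRunRecord` and its fields are not any standard) and a SHA-256 hash of the weights so the exact artifact can later be tied to the run that produced it.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


def sha256_of_bytes(data: bytes) -> str:
    """Hex SHA-256 digest of raw bytes, e.g. the contents of a weights file."""
    return hashlib.sha256(data).hexdigest()


@dataclass
class TrainingRunRecord:
    # Hypothetical schema: field names are illustrative, not a standard.
    run_id: str
    initiated_by: str
    base_model: str
    dataset_ids: list[str]
    weights_sha256: str
    # UTC timestamp captured when the record is created.
    recorded_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def append_record(log_lines: list[str], record: TrainingRunRecord) -> None:
    """Append one JSON line per run (JSON Lines style); earlier lines are never edited."""
    log_lines.append(json.dumps(asdict(record), sort_keys=True))


# Usage: log a run whose (placeholder) weights bytes are b"abc".
run_log: list[str] = []
append_record(
    run_log,
    TrainingRunRecord(
        run_id="run-001",
        initiated_by="alice",
        base_model="example-base-v1",
        dataset_ids=["ds-support-tickets"],
        weights_sha256=sha256_of_bytes(b"abc"),
    ),
)
print(run_log[0])
```

In practice the lines would go to write-once or access-controlled storage rather than an in-memory list; the hash is what lets a company show later that a specific weights file came from a specific, authorized run.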

The Employee’s Perspective

Employees often assume that side projects or home experiments are personal property, even if inspired by work. Without clear agreements, this assumption can lead to ownership disputes. For instance, if an employee uses company data to fine-tune an open-source model, the employer may claim the resulting weights under the employee’s IP assignment agreement or as trade secrets derived from its confidential data, even though the underlying open-source model remains subject to its own license.

The safest approach is transparency: declare any AI-related side projects that might overlap with company resources or data. HR and legal teams can then determine ownership boundaries before conflicts arise.

The Role of NDAs and Confidentiality Agreements

Non-Disclosure Agreements (NDAs) are critical for protecting trade secrets and confidential training data. When employees or contractors train models, NDAs should extend to the resulting weights and datasets. That means employees must agree not to share, replicate, or upload trained models to unapproved cloud platforms or repositories.

Contracts should explicitly define “AI assets” as including models, weights, training data, prompts, and outputs. This prevents ambiguity if an employee departs or collaborates externally.

Building a Responsible AI Policy

Ownership Clauses

Every AI policy should state that all models, datasets, and outputs created in the scope of employment or with company data are company property. Include language like:

“Any machine learning model, training dataset, or derivative work developed using company resources or data shall be deemed a Company AI Asset, fully owned by the Company.”

Consent Procedures

Consent management should be an integral part of AI governance. Before any dataset is used for training, confirm its origin, permissions, and sensitivity. Maintain audit trails showing that consent was obtained where necessary.
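One way to make these checks enforceable is a gate that every dataset must pass before a training job starts, with each decision written to an audit log. This is a hedged sketch: the `DatasetRecord` fields, the sensitivity labels, and the rule that only “personal” data needs a consent reference are hypothetical policy choices, not legal requirements.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional

# Hypothetical policy: which sensitivity labels require a documented consent record.
REQUIRES_CONSENT = {"personal"}


@dataclass(frozen=True)
class DatasetRecord:
    # Hypothetical registry entry covering origin, permissions, and sensitivity.
    dataset_id: str
    origin: str
    permitted_for_training: bool
    sensitivity: str  # e.g. "public", "internal", "confidential", "personal"
    consent_reference: Optional[str] = None  # ID of the notice/consent record


def approve_for_training(record: DatasetRecord, audit_log: list[dict]) -> bool:
    """Check one dataset and append the decision, with reasons, to the audit log."""
    reasons = []
    if not record.origin:
        reasons.append("unknown origin")
    if not record.permitted_for_training:
        reasons.append("training not permitted by source terms")
    if record.sensitivity in REQUIRES_CONSENT and not record.consent_reference:
        reasons.append("personal data without consent record")
    approved = not reasons
    audit_log.append(
        {
            "dataset_id": record.dataset_id,
            "approved": approved,
            "reasons": reasons,
            "checked_at": datetime.now(timezone.utc).isoformat(),
        }
    )
    return approved


audit: list[dict] = []
approve_for_training(DatasetRecord("ds-1", "internal-crm", True, "internal"), audit)
approve_for_training(DatasetRecord("ds-2", "hr-chat-export", True, "personal"), audit)
for entry in audit:
    print(entry["dataset_id"], entry["approved"], entry["reasons"])
```

Recording rejections as well as approvals matters here: the audit trail is meant to show that the check ran, not just that some datasets passed.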

Access Control

Restrict who can initiate training runs, download models, or access sensitive data. Implement two-person approvals for projects involving personal or confidential datasets.
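A two-person rule can be expressed as a small authorization check run before training launches. The sketch assumes hypothetical sets of authorized trainers and approvers and treats “personal” and “confidential” datasets as the ones needing dual approval; the requester never counts as one of their own approvers.

```python
# Hypothetical policy: dataset sensitivity levels that require dual approval.
DUAL_APPROVAL_LEVELS = {"personal", "confidential"}


def may_start_training(
    requester: str,
    sensitivity: str,
    approvals: set[str],
    authorized_trainers: set[str],
    authorized_approvers: set[str],
) -> bool:
    """Return True if the requester may launch a training run on this dataset."""
    # Only designated people may initiate training at all.
    if requester not in authorized_trainers:
        return False
    if sensitivity not in DUAL_APPROVAL_LEVELS:
        return True
    # Count distinct authorized approvers, excluding the requester themself.
    valid = {a for a in approvals if a in authorized_approvers and a != requester}
    return len(valid) >= 2


trainers = {"alice", "bob"}
approvers = {"alice", "carol", "dave"}
print(may_start_training("alice", "personal", {"alice", "carol"}, trainers, approvers))  # False
print(may_start_training("alice", "personal", {"carol", "dave"}, trainers, approvers))  # True
```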

Model Documentation

Create a “Model Bill of Materials” (mBoM) for every training project. It should list:

- Base model and version

- Data sources and licenses

- Training scripts and hyperparameters

- Weights, checkpoints, and access logs

This documentation supports ownership claims and compliance reviews.
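An mBoM can be kept as structured data so that gaps are easy to spot during compliance review. The dataclasses below are an illustrative schema mirroring the list above (field names such as `access_log_uri` are assumptions, not an established mBoM format), with a helper that reports empty entries and data sources missing license information.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class DataSource:
    name: str
    license: str  # license or contract reference; empty if unknown


@dataclass
class ModelBOM:
    # Illustrative schema mirroring the mBoM list; not an established format.
    base_model: str
    base_model_version: str
    data_sources: list[DataSource]
    training_script: str
    hyperparameters: dict[str, object]
    checkpoints: list[str] = field(default_factory=list)  # paths or content hashes
    access_log_uri: str = ""

    def to_json(self) -> str:
        """Serialize the whole BOM, including nested data sources."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)

    def missing_fields(self) -> list[str]:
        """List empty entries and unlicensed sources for compliance review."""
        required = {
            "base_model": self.base_model,
            "base_model_version": self.base_model_version,
            "data_sources": self.data_sources,
            "training_script": self.training_script,
            "hyperparameters": self.hyperparameters,
            "checkpoints": self.checkpoints,
            "access_log_uri": self.access_log_uri,
        }
        missing = [name for name, value in required.items() if not value]
        missing += [f"license for {s.name}" for s in self.data_sources if not s.license]
        return missing


bom = ModelBOM(
    base_model="example-base",
    base_model_version="1.0",
    data_sources=[
        DataSource("support-tickets-2024", "internal"),
        DataSource("vendor-corpus", ""),
    ],
    training_script="train.py",
    hyperparameters={"learning_rate": 2e-5, "epochs": 3},
)
print(bom.missing_fields())
# ['checkpoints', 'access_log_uri', 'license for vendor-corpus']
```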

Contractors, Vendors, and Outsourced Teams

When external contractors or vendors participate in training, ownership becomes even more complex. Contracts must include:

- Explicit IP assignment to the hiring company

- Confidentiality and return-of-materials clauses

- Assurances that third-party data or models are properly licensed

Without these clauses, contractors may retain partial rights or inadvertently violate third-party terms, putting your company at risk.

When Things Go Wrong: Common Disputes

Employee Departure

Disputes often arise when key engineers leave and take trained models or datasets with them. The company must prove ownership and that the model qualifies as a trade secret. Well-maintained logs and signed agreements make all the difference.

Unauthorized Model Uploads

If an employee uploads company-trained models to public repositories like Hugging Face or GitHub, it can trigger loss of trade secret protection or even data privacy violations. A rapid takedown response plan should be in place.

Dual Use of Open Models

Using open-source models under restrictive licenses can also create problems. If company data is used to fine-tune a model with a “non-commercial use” license, the resulting model might not be usable in production without violating terms.
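Because a fine-tuned model typically remains subject to its base model’s license, a pre-deployment check should walk the whole fine-tuning lineage, not just the final model. In this sketch the restricted list is a hypothetical policy set maintained by legal (the two Creative Commons non-commercial identifiers are real SPDX IDs, but many model licenses are custom and need individual review), and an `UNKNOWN` license is treated as blocking.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical policy list maintained by legal. The two entries are SPDX IDs for
# Creative Commons non-commercial licenses; custom model licenses need review.
RESTRICTED_FOR_PRODUCTION = {"CC-BY-NC-4.0", "CC-BY-NC-SA-4.0"}


@dataclass(frozen=True)
class ModelLineage:
    name: str
    license_id: str  # "UNKNOWN" if no license has been confirmed
    parent: Optional["ModelLineage"] = None  # the model this one was fine-tuned from


def blocking_licenses(model: ModelLineage) -> list[str]:
    """Walk from the deployed model back to its root and collect blockers."""
    blockers = []
    node: Optional[ModelLineage] = model
    while node is not None:
        if node.license_id in RESTRICTED_FOR_PRODUCTION or node.license_id == "UNKNOWN":
            blockers.append(f"{node.name}: {node.license_id}")
        node = node.parent
    return blockers


research_base = ModelLineage("research-base", "CC-BY-NC-4.0")
support_bot = ModelLineage("support-bot", "PROPRIETARY", parent=research_base)
print(blocking_licenses(support_bot))  # ['research-base: CC-BY-NC-4.0']
```

The design choice is to fail closed: a model whose lineage includes an unconfirmed license is held back until someone resolves it, rather than shipped on the assumption that it is fine.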

Real-World Case Studies and Lessons

The Getty Images vs. Stability AI Dispute

In 2023, Getty Images sued Stability AI, alleging that the company trained its image models on millions of copyrighted Getty images without a license. Although this case involves a commercial model developer, it illustrates how training on unlicensed data can create significant liability exposure. Internal training can raise similar questions if company data contains third-party content.

The OpenAI Copyright Suits

Authors and developers have sued OpenAI, alleging their copyrighted works were used for model training without permission. These cases may shape how courts define “fair use” in the context of AI. Employers must watch these developments closely to understand how employee training practices may be evaluated in court.

Internal Corporate Disputes

Some tech firms have already faced disputes over AI model ownership after employees left to launch startups. The common thread? Lack of clear agreements defining what constitutes a company AI asset.

Steps Companies Can Take Now

- Update Employment Contracts: Include AI-specific IP assignment and confidentiality clauses.

- Implement an AI Governance Policy: Define who can train models, what data can be used, and how ownership is tracked.

- Educate Employees: Provide regular briefings on data rights, trade secrets, and ethical AI practices.

- Log Every Training Session: Maintain digital records of all model training runs, datasets, and weights.

- Conduct Privacy Reviews: Evaluate datasets for personally identifiable information (PII) and ensure compliance with privacy laws.
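A first-pass privacy review can flag obvious identifiers before a human reviewer looks closer. The regular expressions below are deliberately simple, U.S.-centric assumptions (email addresses, SSN-formatted numbers, and U.S. phone numbers) and will miss names, addresses, and many other kinds of PII; treat this as a triage aid, not a compliance determination.

```python
import re

# Deliberately simple, U.S.-centric patterns for triage only.
PII_PATTERNS = {
    "email": re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "us_phone": re.compile(r"(?<!\d)\(?\d{3}\)?[-. ]?\d{3}[-. ]\d{4}(?!\d)"),
}


def scan_for_pii(text: str) -> dict[str, int]:
    """Count matches per category; any nonzero count means human review."""
    return {name: len(pattern.findall(text)) for name, pattern in PII_PATTERNS.items()}


def flag_records(records: list[str]) -> list[int]:
    """Indices of records that contain at least one suspected identifier."""
    return [i for i, text in enumerate(records) if any(scan_for_pii(text).values())]


sample = "Contact jane.doe@example.com or 555-123-4567; SSN 123-45-6789."
print(scan_for_pii(sample))  # {'email': 1, 'us_ssn': 1, 'us_phone': 1}
print(flag_records(["no personal data here", "call (555) 123-4567"]))  # [1]
```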

For Employees: Protecting Yourself and Staying Compliant

Employees can avoid conflict by following a few best practices:

- Never train models on company data without explicit authorization.

- Keep personal AI projects separate from company resources and accounts.

- Understand your employment contract’s IP ownership clauses.

- Document your contributions clearly to prevent misattribution.

Transparency and communication with legal and HR teams go a long way toward preventing misunderstandings.

The Future of Ownership and Consent in AI Workplaces

As generative AI becomes standard across industries, defining ownership boundaries will be as essential as cybersecurity. Policymakers are still catching up, and courts will continue shaping precedents. Companies that act proactively—through contracts, policies, and transparency—can protect both innovation and compliance.

In the long run, ethical and legally compliant AI training practices will build trust, safeguard intellectual property, and prevent the next generation of data-related disputes.

Key Takeaways

- Ownership: Use IP assignment and “work made for hire” clauses to secure company rights.

- Consent: Verify data permissions before any training begins.

- Documentation: Keep detailed logs of model architectures, datasets, and contributors.

- Education: Train teams on AI ethics, trade secrets, and data compliance.

- Policy: Adopt clear governance frameworks for all internal AI initiatives.

Conclusion

When employees train AI models on company data, the result can be groundbreaking innovation—or a legal gray zone. Ownership and consent determine which outcome prevails. Companies that clarify these boundaries early, through thoughtful contracts and transparent governance, protect not only their assets but also their workforce.

By treating AI models as formal intellectual property, documenting consent, and maintaining confidentiality, organizations can lead responsibly in an increasingly AI-driven economy.

External reference: For ongoing updates, visit the U.S. Copyright Office’s Artificial Intelligence Policy Page.